1. Introduction

This white paper shares vision and challenges in testing .NET applications. Never before, has any technology or framework tried bringing many disparate systems and languages under one roof for the benefit of enterprise applications. But with the advent of the .NET architecture we have systems like Visual Basic, COM, Web Services with technologies like XML and SOAP (Simple Object Application Protocol), servers like SQL, BizTalk, etc, delivering enterprise solutions has become easy.

We will examine .NET from several perspectives:

2. .NET architecture for applications

2.1 Application Tiers

Since the beginning of IT, systems designers have faced a number of common problems. The four main areas that any design must address are

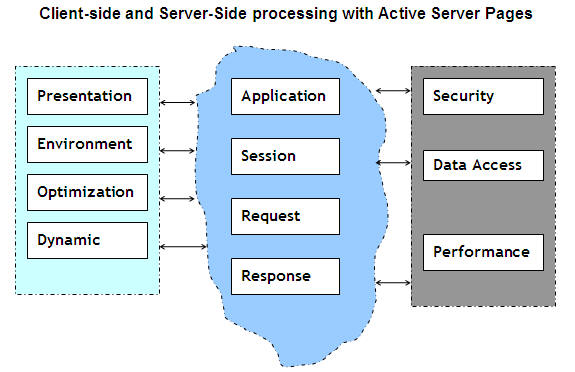

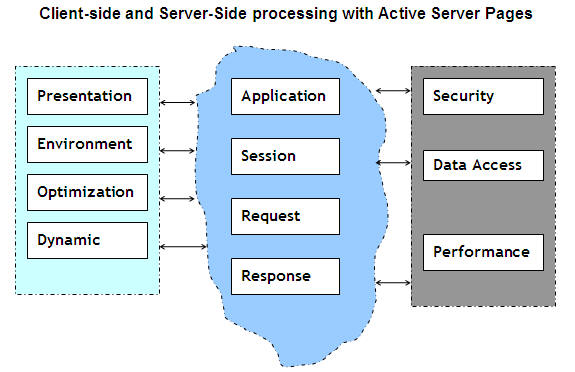

Before .NET existed, there were a number of approaches to building web-based applications. Microsoft provided various development tools and servers that delivered a very useful framework for the design. Microsoft's key technologies included IIS (Internet Information Server), SQL Server, Commerce Server, and Index Server as well as development tools like Visual Studio, InterDev and even Front Page. MTS (Microsoft Transaction Server) and MSMQ (Message Queue) delivered high levels of performance for app servers. ASP's (Active Server Pages) provided the focal point for web page development for dynamic content. The ASP framework provides a conduit between the client browser and the back-end servers. It allowed developers to control many of the key functions of the application (e.g. presentation layer and data access), although ASP's were arguably less capable when it came to separating out the business logic.

An ASP is a dynamic process that runs on the web server. It serves up our web page for the browser to display. It performs a variety of tasks in conjunction with the IIS web server, security model and our underlying data sources before it sends the page to the browser. The client-side browser may also perform some processing although this is typically presentation related. ASP and IIS maintain various objects that allow the client and server to communicate with each other or maintain context (e.g. Request/Response). This model throws some questions to the designers. Do we do everything on the server (which makes it easier to manage) or do we let the client do something (which is better for performance). In reality, we need to do both.

Moving Towards .Net

The older ASP model had some limitations. Developers found it difficult to code an elegant, modular solution. An Active Server Page was a combination of static HTML and programming via one or more scripting languages. Just to display information required large amounts of code, not allowing for database access or calls to business services. Active Server Pages did not have an access path to business services as such, so this meant that our presentation code was tightly bound to data access logic. This could create some considerable code maintenance problems. In addition, the application could be inflexible or have problems in scaling. Developing business services in COM/DCOM ([Distributed] Common Object Model]) overcame some of the issues. However, COM skills were difficult to master and even then, components could not interact directly or run on non-Microsoft platforms.

There was a clear need to address the following development issues:

a) Have a better Application Model built on common Internet standards E.g. HTTP, XML, SOAP.

b) Separate presentation, business logic & data access E.g. Introduce Web Services as identifiable & re-useable components.

c) Bring Visual Basic style productivity to web forms design.

d) Preserve existing developments (ASP and Windows) and move forward to a unified approach. .NET does this through a number of tools, products, servers and the CLR (Common Language Runtime) that make up the complete solution.

.NET introduces new servers like BizTalk that provide high-performance, secure document or data exchange.

XML & SOAP

.NET uses XML & SOAP messages as the communications "glue" between tiers to provide a flexible, accessible, standards-based approach to communications. Their extensive use in the .NET architecture allows designers to build Web Services that run on any platform.

Web Services

Because applications are now collaborative or outward facing, we need a mechanism to expose common business logic. The logic may sit inside our intranet (e.g. lookup employee details) or outside (e.g. perform a credit score via external agency). A Web Service provides a standard way of implementing both requests and can present its interface in an open, public format. Adding new functionality to an application should be simpler in a Web Services-led environment, because of this location independent approach.

3. What does this mean for developers & testing?

3.1 A sample .NET environment

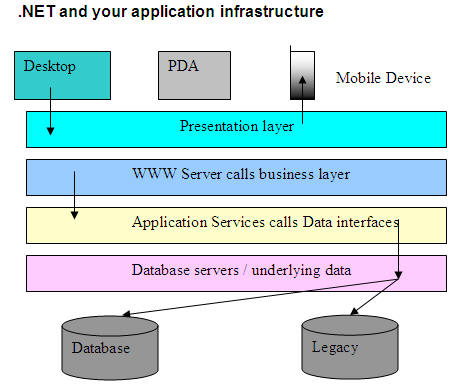

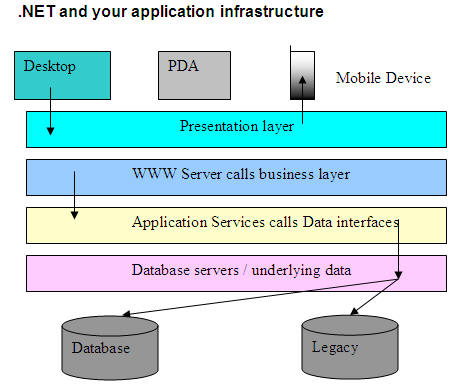

As far as developers are concerned, .NET should make their life a lot easier. Consider the typical infrastructure required to support a serious web-based application. Developers no longer have to write monolithic code inside a single unit or Active Server Page. For example using Visual Studio.NET the presentation logic is now much more compact. New style ASPX pages support the concept of 'code behind'. This means that business logic is separate, but .NET ensures that the two elements communicate effectively. In turn, the business (Web) service may format an XML message that triggers a request in database server. That request may execute as Stored Procedure, which finally accesses the data. Information passes back up the chain until the ASPX renders the information in whatever format our device can handle (HTML, WML etc). Although a .NET application looks identical to existing web-based systems, the .NET environment has some unique characteristics that can impact test management and test development.

3.2 Methodology for .NET testing

Testing .NET requires a methodical approach which draws together test DESIGN, test DATA, PROCESSES, RESOURCES (technical and people) and ANALYSIS tools.

3.2.1 Understanding the development environment/cycle

Testing shouldn't be a separate activity that occurs in isolation from development. However, the connection between them is easily broken. We have shown that .NET has significantly improved solution development in a number of areas such as productivity, separation and cross platform deployment. .NET provides a framework for developing conventional Windows applications too. The array of languages, databases, interfaces and operating systems that make up our complete solution, end-to-end, is very broad. Each development decision may have an impact on our test management and execution (e.g. choosing Stored Procedures as our database access method vs. SQL requests). A strong understanding of .NET, the underlying technical architectures and the development environment provides a much better foundation for meeting and then executing your test strategy objectives.

3.2.2 Approach for Web Services (application components)?

Web Services can exist inside or outside of an organization. Therefore, we need to understand the parameters for acceptable performance. An Intranet service may have a smaller, known user base to contend with, but perhaps a high hit rate if the service is widely used. A service designed for external use (e.g. Company.Customer.CreditCheck) has a much broader usage base and has a very different security and performance profile.

Having understood the performance parameters we also need to understand more about the 'plumbing' that .NET provides. In fact, .NET supports both HTTP and SOAP requests. So, in a performance test we need to know which communication type our clients are using so that we can simulate that load on our system correctly.

Web Services are effectively decoupled from the presentation of data. Therefore, they have no UI (User Interface) of their own. An environment that includes Web Services has to focus on the integrity of those services from both a unit and integration viewpoint.

3.2.3 Points of failure

A typical .NET deployment consists of many servers and services. Each layer or tier is capable of providing monitoring hooks through the CLR. This allows experienced consultants to identify the bottlenecks and performance behavior of components in the .NET system.

Testing .NET requires a deep understanding of the end-to-end architecture. Here are some of the common test activities

4.1 End To End View

Any web-based application, whether or not it uses .NET technology, is rather like an iceberg. Only a small proportion of the supporting infrastructure is actually visible to an end user. The great innovation behind the web was that it turned our processes 'inside-out' to face customers or partners. The potential downside is that the applications (reputation) are only as good as the weakest link in the chain.

4.1.1 Sample .NET application - account maintenance

The web application itself may be doing nothing more than provide account snapshots. The web page server/content resides at the ISP. We will assume the customer has dedicated 2 x 10Mb links from their ISP to connect to their own .NET application server. It utilizes Web Services to provide a variety of account lookup functions (by account id or by customer surname, postcode and house number). The client is experiencing a performance problem when the number of users on the web site is > 200, but they don't know where the cause of the problem lies.

4.1.2 Sample .NET application - the case for end-to-end testing

For this scenario the need for end-to-end testing to flush out potential hot spots in the overall infrastructure is necessary. In our sample environment, it is all too easy for various vendors (the ISP, the Content Management application, the Database Vendor) to say 'it's not me' and even to show some component stats that prove the database can 'handle 200 users'. Our suggested approach in this case might be that, at first, we look at the communications bandwidth and the interfaces between layers. By performing a series of appropriate performance tests and monitoring the behavior of the system from an end-to-end perspective, it is possible to see the big picture.

4.1.3 Sample .NET application - monitoring & statistics

The performance test may replicate real users (web-interface) at the front-end, and introduce a mix of HTTP, SOAP/XML transactions. By collecting relevant statistics from network hardware, database servers, and CPU/Memory/Swap information from the .NET CLR, we should begin to see which units are working to or beyond their capacity.

4.1.4 Sample .NET application - conclusion

In this scenario, we have highlighted just some of the potential issues that may occur in an integrated environment and an approach to solving them. To take a final example, the SOAP protocol used to drive our account lookups is probably a simple, yet slightly effusive interface to decode. If the connections between our web server and the application server are reusable, we get better performance from our network bandwidth. However, the 'system' automatically adds additional security/authorization information, if the connections are not reusable. This means that performance overall, for the same transaction load is worse because of the side effect of the security model. The system needs to send extra data over the network to accomplish the same work. What this shows is that there is a need to have a complete view of the environment. To make sense of your tests and the results requires an understanding of the overall infrastructure and the complex inter-dependencies between systems.

4.2 Testing to be easier in a .NET environment?

Many elements of testing should be simplified because the underlying architecture behind .NET is much more flexible than the ASP-based model. We have seen the launch of new, and improved, third party tools that support the .NET environment. For example, they will support the new .NET Web Controls. They will also focus on interaction with Web Services and the interrogation of component interfaces. In addition, many of the Visual Studio development tools provide 'out of the box' functionality that improves testing and debugging of .NET applications. The new CLR provides many detailed hooks that aid performance monitoring. IS Integration also expects that there will be a need to adapt existing tools to the .NET framework, by generating test harnesses that 'understand' .NET components. It will also require specialist skills to establish the appropriate HTTP, XML/SOAP interfaces required for a performance or stress test environment. .NET highlights the need for an integrated, end-to-end view of testing.

5. Tools for testing in .NET environment

Some of the tools that are available that would help us in testing .NET applications are

This white paper shares a vision of, and the challenges in, testing .NET applications. Never before has a technology or framework tried to bring so many disparate systems and languages under one roof for the benefit of enterprise applications. With the advent of the .NET architecture, systems like Visual Basic, COM and Web Services, technologies like XML and SOAP (Simple Object Access Protocol), and servers like SQL Server and BizTalk come together, and delivering enterprise solutions has become easier.

We will examine .NET from several perspectives:

- First, we will look at how a web-based system needs to support different application views for internal employees, trusted partners, and external customers.

- Next, we examine the architecture that is required to support the system. The architecture consists of different logical tiers. We will see how .NET tiers interact with each other, and how information flows between them.

- Then, we look at the implications that .NET has for all kinds of testing, from both a functional and performance angle. We will consider what it takes to successfully deploy this type of application.

2. .NET architecture for applications

2.1 Application Tiers

Since the beginning of IT, systems designers have faced a number of common problems. The four main areas that any design must address are:

- Display or render information according to context and the capabilities of the 'Device'.

- Ensure that the business model is properly implemented in the systems.

- Retrieve the required information from the data source.

- Ensure that the appropriate levels of confidentiality, integrity and access are supported.

Before .NET existed, there were a number of approaches to building web-based applications. Microsoft provided various development tools and servers that delivered a very useful framework for the design. Microsoft's key technologies included IIS (Internet Information Server), SQL Server, Commerce Server, and Index Server, as well as development tools like Visual Studio, InterDev and even FrontPage. MTS (Microsoft Transaction Server) and MSMQ (Microsoft Message Queuing) delivered high levels of performance for app servers. ASPs (Active Server Pages) provided the focal point for developing web pages with dynamic content. The ASP framework provided a conduit between the client browser and the back-end servers. It allowed developers to control many of the key functions of the application (e.g. presentation layer and data access), although ASPs were arguably less capable when it came to separating out the business logic.

An ASP is a dynamic process that runs on the web server. It serves up our web page for the browser to display. It performs a variety of tasks in conjunction with the IIS web server, the security model and our underlying data sources before it sends the page to the browser. The client-side browser may also perform some processing, although this is typically presentation related. ASP and IIS maintain various objects that allow the client and server to communicate with each other or maintain context (e.g. Request/Response). This model poses some questions for designers. Do we do everything on the server (which makes it easier to manage), or do we let the client do some of the work (which is better for performance)? In reality, we need to do both.

Moving Towards .NET

The older ASP model had some limitations. Developers found it difficult to code an elegant, modular solution. An Active Server Page was a combination of static HTML and programming via one or more scripting languages. Just displaying information required large amounts of code, even before allowing for database access or calls to business services. Active Server Pages did not have an access path to business services as such, which meant that our presentation code was tightly bound to data access logic. This could create considerable code maintenance problems. In addition, the application could be inflexible or have problems in scaling. Developing business services in COM/DCOM ([Distributed] Component Object Model) overcame some of the issues. However, COM skills were difficult to master and, even then, components could not interact directly with, or run on, non-Microsoft platforms.

There was a clear need to address the following development issues:

a) Have a better Application Model built on common Internet standards, e.g. HTTP, XML, SOAP.

b) Separate presentation, business logic & data access, e.g. introduce Web Services as identifiable & re-useable components.

c) Bring Visual Basic style productivity to web forms design.

d) Preserve existing developments (ASP and Windows) and move forward to a unified approach.

.NET does this through a number of tools, products, servers and the CLR (Common Language Runtime) that make up the complete solution.

.NET introduces new servers like BizTalk that provide high-performance, secure document or data exchange.

XML & SOAP

.NET uses XML & SOAP messages as the communications "glue" between tiers to provide a flexible, accessible, standards-based approach to communications. Their extensive use in the .NET architecture allows designers to build Web Services that run on any platform.

Web Services

Because applications are now collaborative or outward facing, we need a mechanism to expose common business logic. The logic may sit inside our intranet (e.g. look up employee details) or outside (e.g. perform a credit score via an external agency). A Web Service provides a standard way of implementing both requests and can present its interface in an open, public format. Adding new functionality to an application should be simpler in a Web Services-led environment because of this location-independent approach.

3. What does this mean for developers & testing?

3.1 A sample .NET environment

As far as developers are concerned, .NET should make their life a lot easier. Consider the typical infrastructure required to support a serious web-based application. Developers no longer have to write monolithic code inside a single unit or Active Server Page. For example, using Visual Studio.NET, the presentation logic is now much more compact. New-style ASPX pages support the concept of 'code behind'. This means that business logic is separate, but .NET ensures that the two elements communicate effectively. In turn, the business (Web) service may format an XML message that triggers a request in the database server. That request may execute as a stored procedure, which finally accesses the data. Information passes back up the chain until the ASPX page renders the information in whatever format our device can handle (HTML, WML etc). Although a .NET application looks identical to existing web-based systems from the outside, the .NET environment has some unique characteristics that can impact test management and test development.

3.2 Methodology for .NET testing

Testing .NET requires a methodical approach which draws together test DESIGN, test DATA, PROCESSES, RESOURCES (technical and people) and ANALYSIS tools.

3.2.1 Understanding the development environment/cycle

Testing shouldn't be a separate activity that occurs in isolation from development. However, the connection between them is easily broken. We have shown that .NET has significantly improved solution development in a number of areas such as productivity, separation of concerns and cross-platform deployment. .NET provides a framework for developing conventional Windows applications too. The array of languages, databases, interfaces and operating systems that make up our complete solution, end-to-end, is very broad. Each development decision may have an impact on our test management and execution (e.g. choosing stored procedures as our database access method vs. SQL requests). A strong understanding of .NET, the underlying technical architectures and the development environment provides a much better foundation for setting and then meeting your test strategy objectives.

3.2.2 Approach for Web Services (application components)?

Web Services can exist inside or outside of an organization. Therefore, we need to understand the parameters for acceptable performance. An Intranet service may have a smaller, known user base to contend with, but perhaps a high hit rate if the service is widely used. A service designed for external use (e.g. Company.Customer.CreditCheck) has a much broader usage base and has a very different security and performance profile.

Having understood the performance parameters we also need to understand more about the 'plumbing' that .NET provides. In fact, .NET supports both HTTP and SOAP requests. So, in a performance test we need to know which communication type our clients are using so that we can simulate that load on our system correctly.
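To make the difference between the two communication types concrete, the following minimal Python sketch builds the same account lookup first as a plain HTTP GET request and then as a SOAP 1.1 envelope. The host name, the `AccountService.asmx` path, the `GetAccount` operation, the `accountId` parameter and the service namespace are all hypothetical names chosen for illustration; only the SOAP 1.1 envelope namespace is standard.

```python
from urllib.parse import urlencode
import xml.etree.ElementTree as ET

SOAP_ENV = "http://schemas.xmlsoap.org/soap/envelope/"  # SOAP 1.1 envelope namespace
SERVICE_NS = "http://example.com/accounts"              # hypothetical service namespace


def build_http_get(base_url: str, operation: str, params: dict) -> str:
    """Return the URL for a plain HTTP GET call to the operation."""
    return f"{base_url}/{operation}?{urlencode(params)}"


def build_soap_envelope(operation: str, params: dict) -> bytes:
    """Return a SOAP 1.1 envelope that carries the same call as XML."""
    envelope = ET.Element(f"{{{SOAP_ENV}}}Envelope")
    body = ET.SubElement(envelope, f"{{{SOAP_ENV}}}Body")
    call = ET.SubElement(body, f"{{{SERVICE_NS}}}{operation}")
    for name, value in params.items():
        ET.SubElement(call, f"{{{SERVICE_NS}}}{name}").text = str(value)
    return ET.tostring(envelope, encoding="utf-8")


# Hypothetical endpoint and operation, used only to compare the two request shapes.
get_url = build_http_get("http://example.com/AccountService.asmx",
                         "GetAccount", {"accountId": "12345"})
soap_body = build_soap_envelope("GetAccount", {"accountId": "12345"})

print(get_url)
print(len(soap_body), "bytes of SOAP payload")
```

A load script would send `get_url` with a GET request and `soap_body` with a POST request, so the two client types exercise different parsing paths and put different amounts of data on the network.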

Web Services are effectively decoupled from the presentation of data. Therefore, they have no UI (User Interface) of their own. An environment that includes Web Services has to focus on the integrity of those services from both a unit and integration viewpoint.

3.2.3 Points of failure

A typical .NET deployment consists of many servers and services. Each layer or tier is capable of providing monitoring hooks through the CLR. This allows experienced consultants to identify the bottlenecks and performance behavior of components in the .NET system.

Testing .NET requires a deep understanding of the end-to-end architecture. Here are some of the common test activities:

- Unit Testing: The CLR (Common Language Runtime) in .NET allows programs written in any language to co-exist. .NET exposes interfaces between components in a standard way. It is possible to generate test utilities that 'discover' the properties (parameters) and methods (function calls) supported by a component. The utilities can then generate per-unit test harnesses automatically. This speeds up unit testing considerably.

- Integration Testing: How do we test the system end to end? Given the increasingly dissimilar technologies involved, it is likely that system components will be developed separately. How do we simulate some components (e.g. stub our Web Services) before they exist in reality? As with unit testing, we can develop integrated test harnesses. By adding business logic to these test components, units can be linked together and even tested ahead of development (a test-first style associated with extreme programming).

- Functional Testing: The functionality of new-style Web Services can be tested using a black box approach. This means sending the relevant HTTP or XML/SOAP requests and checking that we get the right response back from the Web Service. We also need to test the format of that response (e.g. is our response returned as XML data?). Remember that a Web Service can be written on a variety of platforms, not just Microsoft, because it uses common standards like HTTP/HTTPS, XML and SOAP to communicate. The test environment may need to cross system (internet/extranet) borders. Have we tested the corresponding publish & discovery interfaces of the Web Service? Have we tested that the Web Service requests result in the relevant changes to the database?

- Stress Testing: One of the key factors for success is understanding who the clients are, both technically and from a business perspective. We need to look to automated tools to assist, because the stress test cannot be conducted manually. The automated environment needs to recreate the client communication (via HTTP, SOAP or LAN) so that we stress the correct execution paths through the system. The simulated clients must also provide the right business scenarios so that we create the mix (enquiries vs. updates) and the load (peak times vs. quiet times) required.

- Compatibility Testing: How do we test the delivery of information against a number of dissimilar devices? Have we used the new-style mobile controls supported by .NET appropriately? Can we check whether the underlying core data returned by the application (XML) was correct but the transformation into the target device's presentation language (e.g. HTML or WML) was incorrect?

4.1 End To End View

Any web-based application, whether or not it uses .NET technology, is rather like an iceberg. Only a small proportion of the supporting infrastructure is actually visible to an end user. The great innovation behind the web was that it turned our processes 'inside-out' to face customers or partners. The potential downside is that the applications (reputation) are only as good as the weakest link in the chain.

4.1.1 Sample .NET application - account maintenance

The web application itself may be doing nothing more than providing account snapshots. The web page server/content resides at the ISP. We will assume the customer has two dedicated 10Mb links from their ISP to connect to their own .NET application server. It utilizes Web Services to provide a variety of account lookup functions (by account id or by customer surname, postcode and house number). The client is experiencing a performance problem when the number of users on the web site exceeds 200, but they don't know where the cause of the problem lies.

4.1.2 Sample .NET application - the case for end-to-end testing

This scenario calls for end-to-end testing to flush out potential hot spots in the overall infrastructure. In our sample environment, it is all too easy for the various vendors (the ISP, the Content Management application, the Database Vendor) to say 'it's not me' and even to show some component stats that prove the database can 'handle 200 users'. Our suggested approach in this case is to look first at the communications bandwidth and the interfaces between layers. By performing a series of appropriate performance tests and monitoring the behavior of the system from an end-to-end perspective, it is possible to see the big picture.
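As a first look at communications bandwidth, a back-of-the-envelope budget is often enough to decide whether the links deserve closer attention. The sketch below assumes that '10Mb' means 10 megabits per second, ignores protocol overhead, and uses a hypothetical average page size of 50 kilobytes; none of these figures are measurements from the scenario.

```python
def per_user_bandwidth_kbps(links: int, link_mbps: float, users: int) -> float:
    """Average bandwidth available per concurrent user, in kilobits per second."""
    total_kbps = links * link_mbps * 1000  # 1 Mbit/s = 1000 kbit/s
    return total_kbps / users


def max_pages_per_second(links: int, link_mbps: float, page_kbytes: float) -> float:
    """Upper bound on pages per second the links can carry, ignoring overhead."""
    total_kbps = links * link_mbps * 1000
    return total_kbps / (page_kbytes * 8)  # 8 bits per byte


# Scenario figures: two 10 Mbit/s links and 200 concurrent users.
print(per_user_bandwidth_kbps(links=2, link_mbps=10, users=200))

# Hypothetical 50 kB average page, used only to illustrate the calculation.
print(max_pages_per_second(links=2, link_mbps=10, page_kbytes=50))
```

If the measured page rate at 200 users sits well below this ceiling, the links are unlikely to be the first bottleneck and attention can move to the interfaces between layers.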

4.1.3 Sample .NET application - monitoring & statistics

The performance test may replicate real users (web-interface) at the front-end, and introduce a mix of HTTP, SOAP/XML transactions. By collecting relevant statistics from network hardware, database servers, and CPU/Memory/Swap information from the .NET CLR, we should begin to see which units are working to or beyond their capacity.
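Once statistics have been collected, spotting the units that are working at or beyond capacity can be as simple as comparing average utilisation against a threshold. The sketch below is a minimal illustration of that step. The component names, the sample values and the 90% threshold are invented for the example and are not results from the scenario.

```python
from statistics import mean


def find_saturated(samples: dict, threshold: float = 0.9) -> list:
    """Return components whose average utilisation is at or above the threshold, worst first."""
    averages = {name: mean(values) for name, values in samples.items()}
    hot = [name for name, avg in averages.items() if avg >= threshold]
    return sorted(hot, key=lambda name: averages[name], reverse=True)


# Invented utilisation samples (fraction of capacity) taken during a test run.
samples = {
    "isp_link": [0.55, 0.60, 0.58],
    "web_server_cpu": [0.40, 0.45, 0.50],
    "app_server_cpu": [0.92, 0.97, 0.95],
    "database_cpu": [0.30, 0.35, 0.33],
}

print(find_saturated(samples))
```

Averages hide short spikes, so a real analysis would probably also look at peak values and at how utilisation changes as the simulated user count rises.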

4.1.4 Sample .NET application - conclusion

In this scenario, we have highlighted just some of the potential issues that may occur in an integrated environment, and an approach to solving them. To take a final example, the SOAP protocol used to drive our account lookups is probably a simple, yet slightly verbose, interface to decode. If the connections between our web server and the application server are reusable, we get better performance from our network bandwidth. If the connections are not reusable, however, the 'system' automatically adds additional security/authorization information. This means that overall performance for the same transaction load is worse because of a side effect of the security model: the system needs to send extra data over the network to accomplish the same work. What this shows is the need for a complete view of the environment. Making sense of your tests and their results requires an understanding of the overall infrastructure and the complex inter-dependencies between systems.
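The connection-reuse effect can be made concrete with a little arithmetic. The sketch below assumes that the security/authorization information is sent once per connection, and that a reusable connection carries the whole load. The payload and authorization sizes are hypothetical.

```python
def bytes_on_wire(transactions: int, payload_bytes: int,
                  auth_bytes: int, reuse_connections: bool) -> int:
    """Total bytes sent for a load, charging the auth overhead once or per transaction."""
    if reuse_connections:
        # One connection: authorization data is sent a single time.
        return auth_bytes + transactions * payload_bytes
    # New connection per transaction: authorization data is sent every time.
    return transactions * (payload_bytes + auth_bytes)


# Hypothetical sizes: 2000-byte SOAP message, 500 bytes of authorization data.
reused = bytes_on_wire(1000, 2000, 500, reuse_connections=True)
not_reused = bytes_on_wire(1000, 2000, 500, reuse_connections=False)

print(reused, not_reused, not_reused / reused)
```

With these assumed sizes, the same 1000 transactions put roughly a quarter more data on the network when connections are not reused, which is the kind of side effect that only an end-to-end view reveals.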

4.2 Will testing be easier in a .NET environment?

Many elements of testing should be simplified because the underlying architecture behind .NET is much more flexible than the ASP-based model. We have seen the launch of new and improved third-party tools that support the .NET environment. For example, they will support the new .NET Web Controls. They will also focus on interaction with Web Services and the interrogation of component interfaces. In addition, many of the Visual Studio development tools provide 'out of the box' functionality that improves testing and debugging of .NET applications. The new CLR provides many detailed hooks that aid performance monitoring. IS Integration also expects that there will be a need to adapt existing tools to the .NET framework by generating test harnesses that 'understand' .NET components. Specialist skills will also be required to establish the appropriate HTTP and XML/SOAP interfaces for a performance or stress test environment. .NET highlights the need for an integrated, end-to-end view of testing.

5. Tools for testing in .NET environment

Some of the tools available to help us in testing .NET applications are:

- FxCop: FxCop is a code analysis tool that checks your .NET assemblies for conformance to the .NET Framework Design Guidelines.

- NUnit: A simple testing framework for .NET languages. Implemented in C#, applies to all .NET languages. Comes with a text mode for inclusion in automated builds, plus a GUI browser. Open Source.

- TraceView: TraceView is a debug utility that captures debug messages from the DBWIN_BUFFER shared memory. It provides features such as tracing selected processes, applying custom filters at runtime, setting the level of tracing, logging messages to log files, and persisting user settings.

- ACT (Microsoft Application Center Test): Application Center Test is designed to stress test Web servers and analyze performance and scalability problems with Web applications, including Active Server Pages (ASP) and the components they use. Application Center Test simulates a large group of users by opening multiple connections to the server and rapidly sending HTTP requests. Application Center Test supports several different authentication schemes and the SSL protocol, making it well suited to testing personalized and secure sites. Although long-duration and high-load stress testing is Application Center Test's main purpose, the programmable dynamic tests will also be useful for functional testing. Application Center Test is compatible with all Web servers and Web applications that adhere to the HTTP protocol.
